Language to Rewards for Robotic Skill Synthesis (2023.6.14 Google DeepMind)

Website

https://language-to-reward.github.io/

Problem

reward shaping in robotics

Solution

utilize LLMs to define reward parameters, and bridge the gap between high-level language instructions or corrections to low-level robot actions

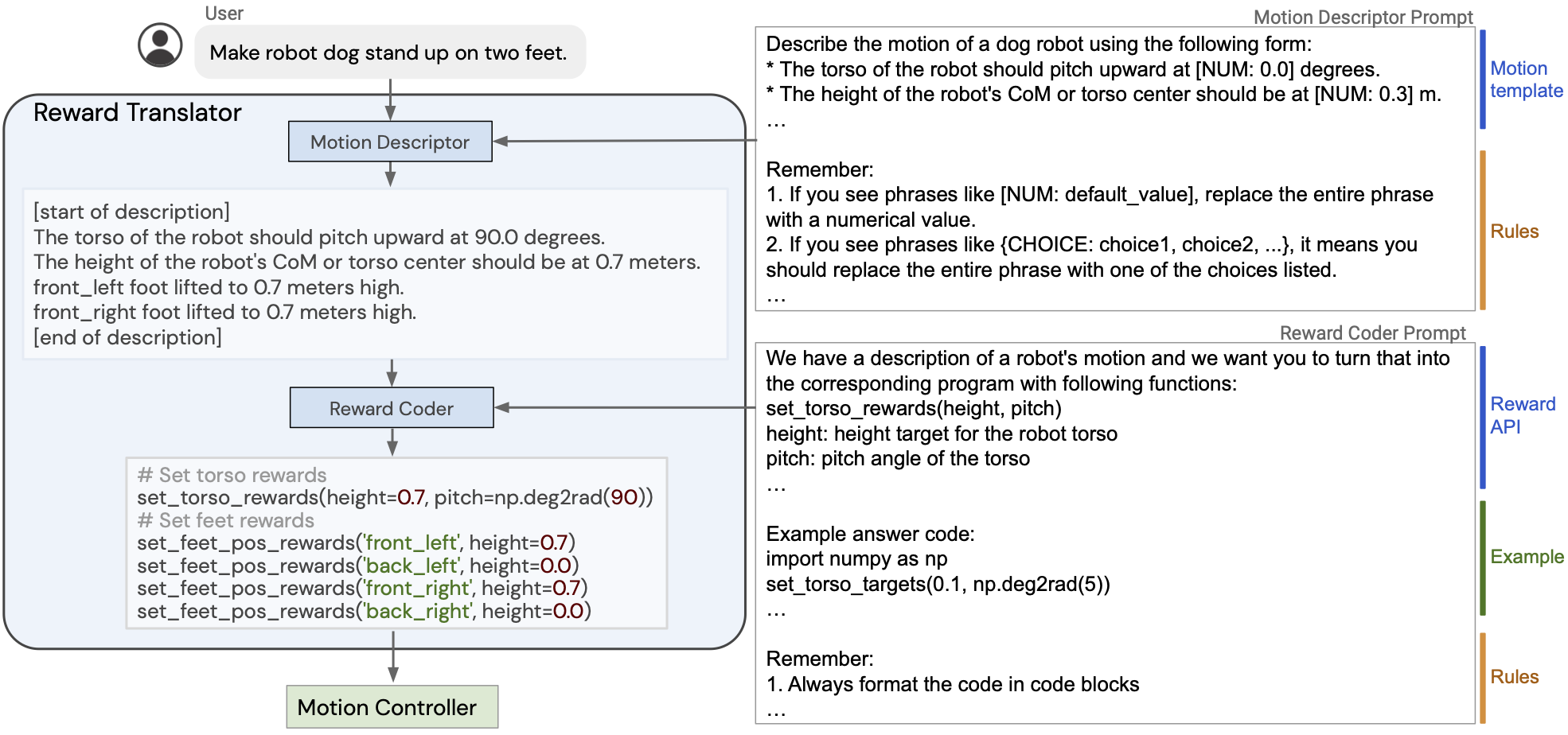

Reward translator + Motion controller

Reward translator:

User instructions + Motion template and rules → Motion description

Motion description + Reward Coder Prompt → Reward function

The transformation is done by LLM

Keypoints:

-

Step by step thinking:

Not directly generate codes but firstly generate description and then generate codes

-

Template:

Directly generating codes is difficult for LLM. But it is much more easier to complete a template

Contributions

Firstly apply the LLM to reward shaping in for robotic skill synthesis

Weaknesses

- Still much work(template design) need to be done by human.

- Constraining the reward design space helps improve stability of the system while sacrifices some flexibility.

Experiments

Environment: MuJoCo MPC

Underlying LLM: GPT-4

2 robots: quadrupted robot(locomotion) and dexterous manipulator(manipulation)

Comparison and ablation experiment:

Reflection

- learn to tell a good story.

- If you have a novel idea, but it does not work well. Maybe you can try to take a step back (i.e. template in this paper) to let it work temporarily. Figure out how to let it work later.